Psychology Professor Jessica Cail Proposes Practical Teaching Strategies Amid Growing AI Use

Facing the growing use of AI in the classroom, some educators have opted not to change their teaching strategies, relying instead on AI detection software to discourage students from submitting artificially generated content. Jessica Cail, assistant professor of teaching of psychology, favors a different approach: encouraging students to develop what she calls “robot-proof” skills.

“Policing is not pedagogy. We need to rethink the motivations behind our course material and writing assignments,” says Cail. “Often a student’s goal at the end of the class isn’t necessarily to have a paper built; it’s to learn how to build the paper.”

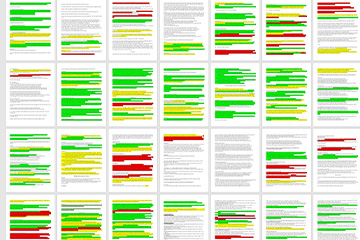

A sample of Cail's data from lower-division students


In her research study, “Visualization of AI Accuracy: A Novel Assignment for the Teaching of Critical Thinking and Science Writing,” published by the Society for the Teaching of Psychology, Cail explains that while it is impossible to prevent students from accessing AI, educators can adopt teaching practices that build skills such as critical thinking, creativity and, importantly, technological literacy.

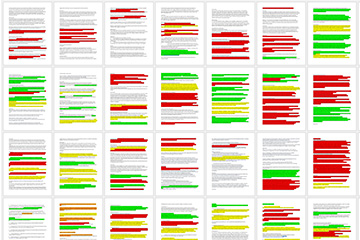

Over the course of three semesters, Cail assigned students in upper- and lower-division psychology classes to use AI to generate literature reviews on their final paper topics. Each student was then tasked with fact-checking their AI-generated paper for accuracy using a color-coded system. This process involved consulting library catalogs to check whether the AI had fabricated any information or cited sources that do not exist, which is notably a common occurrence.

Text highlighted in red indicated inaccurate information, yellow flagged material that was accurate but not useful, and green signified information that was both accurate and useful.

A sample of Cail's data from upper-division students


The data revealed that lower-division students, who have less experience evaluating scholarly sources, were prone to highlighting more text in green. In contrast, students in 300-level courses and above rejected significantly more AI-produced content, identifying more inaccurate or fabricated information and displaying greater discernment in their evaluations. Cail also found that students who believe AI provides consistently accurate information are more likely to rely on it when drafting their essays and coursework.

“We can’t bury our heads in the sand about AI,” says Cail. “When AI became popular I knew that I needed to be proactive in making my students aware that, while AI can be used as a tool, this technology can never be used to replace human cognition. The color-coding system communicated AI accuracy to my students more than a syllabus could have.”

The results of Cail’s study reinforce her argument that educators need to give students a foundation of knowledge that lets them recognize AI’s unreliability. She believes that students who gain basic AI literacy will be more likely to invest in developing their own writing and critical thinking skills, which will ultimately help them prepare for graduate studies and the workforce.

Although Cail earned her MA and PhD in experimental psychology from Boston University, she studied journalism as an undergraduate, a background that continues to inform her dedication to cultivating her psychology students’ writing skills and research strategies.

“My whole life has been centered around the idea of finding truth and disseminating truth to the world,” says Cail. “Now as a science teacher, it is integral to society that I teach the next generations of students how to critically think.”